Research & Projects

Below are selected research projects and publications led by me, grouped by theme. For additional projects that I have collaborated on or supported within the lab, please visit the RoboPI Lab website.

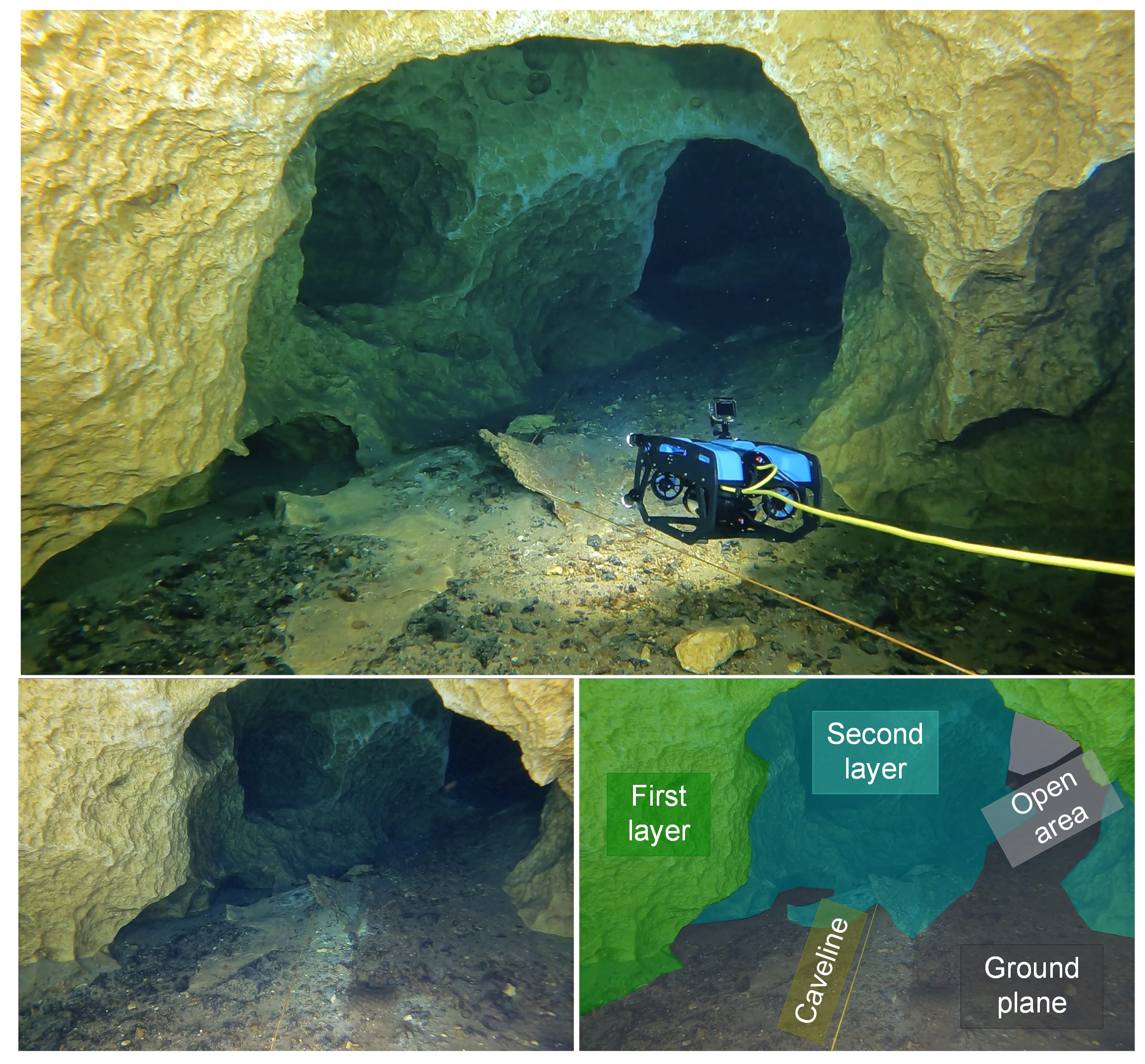

Underwater Cave Exploration with AUVs

CaveSeg Project

In this project, we present CaveSeg, the first visual learning pipeline for semantic segmentation and scene parsing for AUV (autonomous underwater vehicle) navigation inside underwater caves. We address the scarcity of annotated data by preparing a comprehensive dataset for semantic segmentation of underwater cave scenes. It contains pixel-level annotations for important navigation markers (e.g., caveline, arrows), obstacles (e.g., ground plane and overhead layers), scuba divers, and open areas for servoing. Moreover, we formulate a novel transformer-based model that is computationally light and offers near real-time execution while achieving state-of-the-art performance. We also explore the design choices and implications of semantic segmentation for visual servoing by AUVs inside underwater caves.

CavePI Project

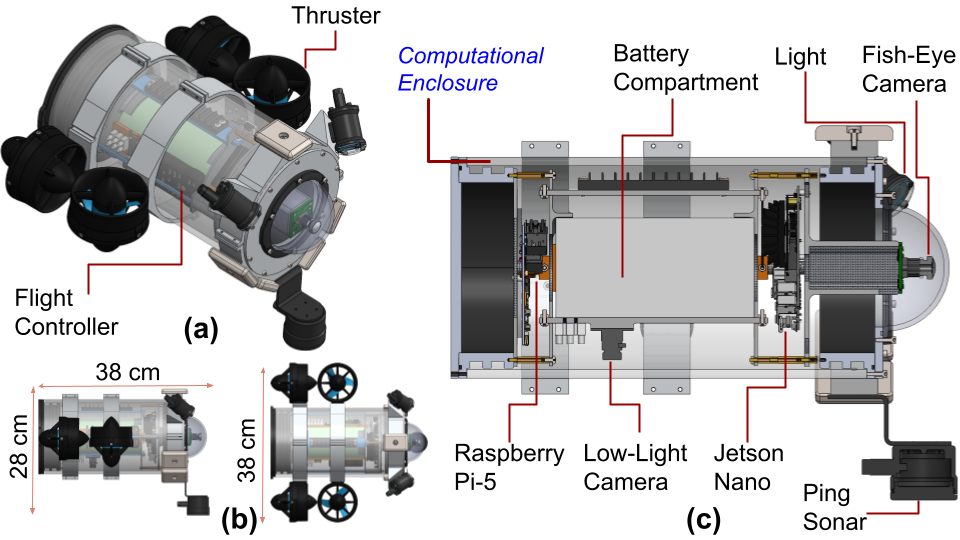

We developed a novel AUV (autonomous underwater vehicle) named CavePI for navigating underwater caves using semantic guidance from cavelines and other navigational markers. Its compact design and 4-DOF (surge, heave, roll, and yaw) motion model enable safe traversal through narrow passages with minimal silt disturbance. Designed for single-person deployment, CavePI features a forward-facing camera for visual servoing and a downward-facing camera for caveline detection and tracking, which together minimize blind spots around the robot. A Ping sonar provides sparse range data for obstacle avoidance and safe navigation within the caves. Onboard computation runs on two single-board computers: a Jetson Nano for perception and a Raspberry Pi 5 for planning and control. We also present a digital twin of CavePI, built using ROS and simulated in an underwater environment via Gazebo, to support pre-mission planning and testing, providing a cost-effective platform for validating mission concepts.
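As a rough illustration of how a caveline detection from the downward-facing camera could be turned into a steering signal, the sketch below estimates the line's lateral offset and orientation from a binary mask and combines them into a proportional yaw command. This is not CavePI's actual controller: the function names, the binary-mask input, the gains, and the sign conventions are all assumptions made for the example.

```python
import math

def caveline_errors(mask):
    """Estimate caveline offset and orientation from a binary mask.

    mask: list of rows, each a list of 0/1 values (1 = caveline pixel).
    Returns (offset, angle) or None if the mask contains no caveline pixels.
      offset: centroid x relative to the image center, normalized to [-1, 1].
      angle:  orientation of the line's principal axis measured from the
              image's vertical axis, in radians.
    """
    width = len(mask[0])
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second central moments of the caveline pixels.
    sxx = sum((x - cx) ** 2 for x, _ in pts) / n
    syy = sum((y - cy) ** 2 for _, y in pts) / n
    sxy = sum((x - cx) * (y - cy) for x, y in pts) / n
    half_w = (width - 1) / 2
    offset = (cx - half_w) / half_w if half_w > 0 else 0.0
    # Principal-axis angle, measured from the vertical (y) image axis.
    angle = 0.5 * math.atan2(2 * sxy, syy - sxx)
    return offset, angle

def yaw_command(mask, k_offset=0.5, k_angle=1.0):
    """Proportional yaw command (hypothetical gains and sign convention)."""
    errors = caveline_errors(mask)
    if errors is None:
        return 0.0  # No caveline visible: command no yaw in this sketch.
    offset, angle = errors
    return -(k_offset * offset + k_angle * angle)
```

In practice the sign of each term depends on how the camera is mounted and how the vehicle defines positive yaw, so both would need to be checked against the real platform or its simulated twin.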

Shared Autonomy & Embodied Teleoperation

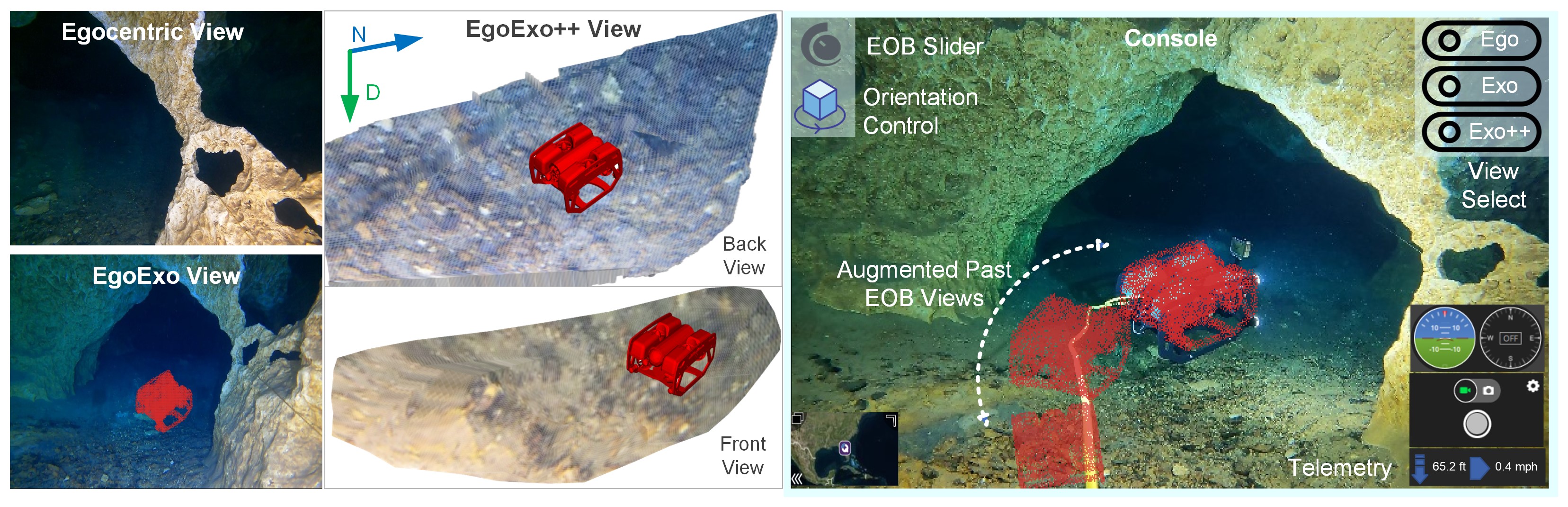

Ego-to-Exo Project

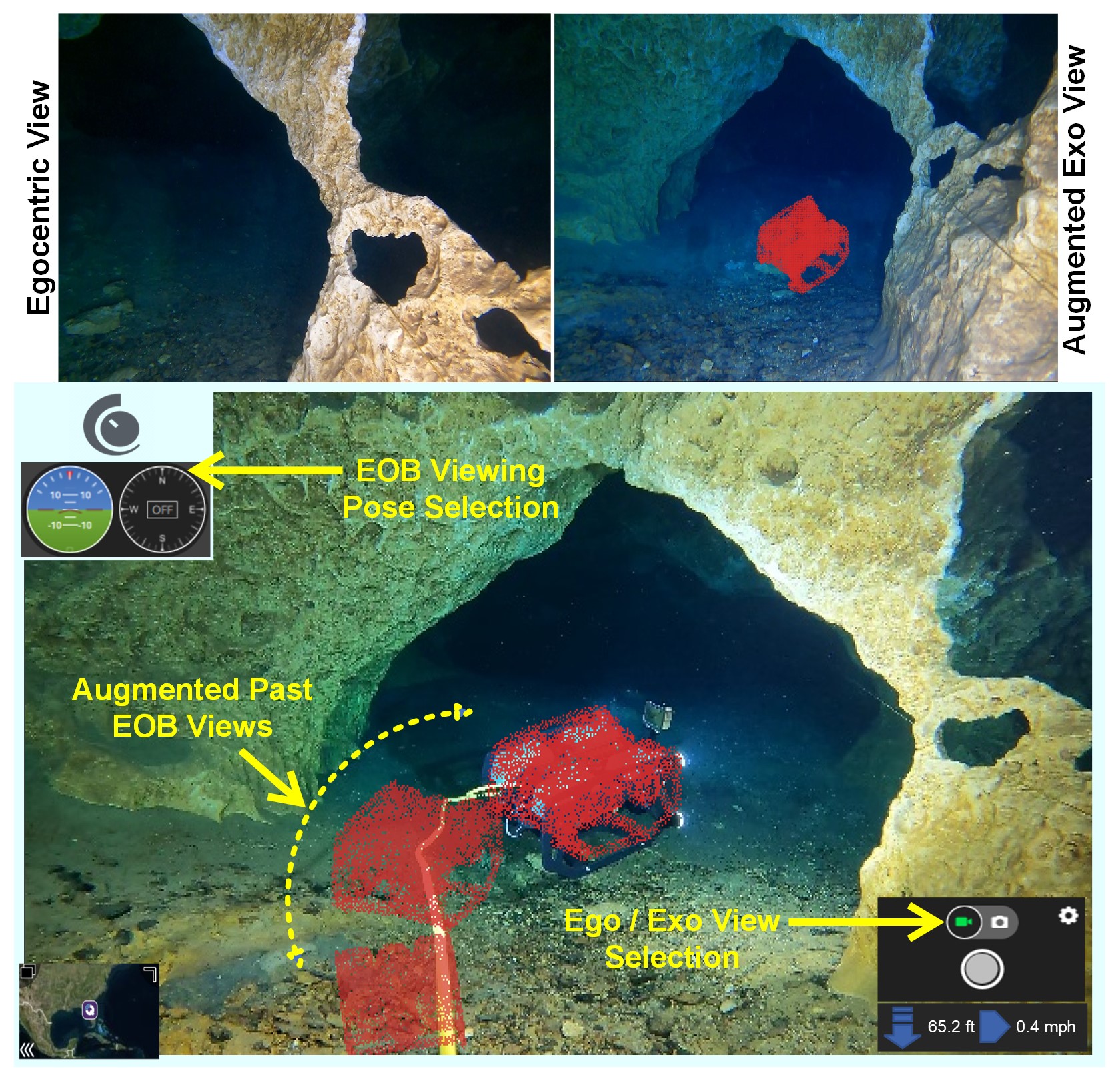

Underwater ROVs (Remotely Operated Vehicles) are unmanned submersible vehicles designed for exploring and operating in the depths of the ocean. Despite using high-end cameras, typical teleoperation engines based on first-person (egocentric) views limit a surface operator's ability to maneuver and navigate the ROV in complex deep-water missions. In this project, we present an interactive teleoperation interface that (i) offers on-demand third-person (exocentric) visuals generated from past egocentric views, and (ii) provides enhanced peripheral information with the augmented ROV pose in real time. We achieve this by integrating a 3D geometry-based Ego-to-Exo view synthesis algorithm into a monocular SLAM system for accurate trajectory estimation. The proposed closed-form solution uses only past egocentric views from the ROV and a SLAM backbone for pose estimation, which makes it portable to existing ROV platforms. Unlike data-driven solutions, it does not depend on the application or on waterbody-specific scenes.
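One geometric step behind this kind of view synthesis can be sketched as follows: given the pose of a past egocentric frame and the ROV's current position (both of which a SLAM backbone could supply), a pinhole projection gives the pixel in the past frame where the ROV would appear. This is only an illustrative sketch under an ideal pinhole model, not the project's algorithm; the function name, the pose convention, and the intrinsics are assumptions.

```python
def project_into_past_view(p_world, R_wc, t_wc, fx, fy, cx, cy):
    """Project a world point into a past camera frame (pinhole model).

    p_world: (x, y, z) point in world coordinates, e.g. the ROV's current position.
    R_wc:    3x3 rotation (list of rows) mapping camera coordinates to world.
    t_wc:    position of the past camera in world coordinates.
    fx, fy, cx, cy: pinhole intrinsics in pixels (assumed known, no distortion).
    Returns (u, v) pixel coordinates, or None if the point lies behind the camera.
    """
    # Express the point in the past camera frame: p_cam = R_wc^T (p_world - t_wc).
    d = [p_world[i] - t_wc[i] for i in range(3)]
    p_cam = [sum(R_wc[r][c] * d[r] for r in range(3)) for c in range(3)]
    x, y, z = p_cam
    if z <= 0:
        return None  # Not in front of the past camera.
    return (fx * x / z + cx, fy * y / z + cy)


# Usage: past camera 1 m behind the origin, looking along world +z.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
pixel = project_into_past_view((0.5, 0.0, 1.0), identity, (0.0, 0.0, -1.0),
                               fx=100.0, fy=100.0, cx=320.0, cy=240.0)
```

An interface could draw an ROV marker at the returned pixel in the chosen past frame; selecting which past frame to use and rendering the vehicle's orientation are separate problems not covered here.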